New app lets police identify suspects on the street

Getty Images

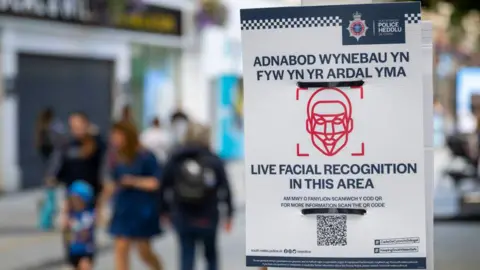

There are concerns that human rights will be breached as police forces in Wales become the first in the UK to launch a facial recognition app.

The app allows officers to use their phones to confirm a person's identity.

It can be used on people who are dead or unconscious, as well as on people who are unable or unwilling to give their details.

Jake Herfert of the civil liberties and privacy group Big Brother Watch said the app "creates a dangerous imbalance between the public's rights and police powers".

The app, known as Operator Initiated Facial Recognition (OIFR), has already been trialled by 70 officers in South Wales and will be used by South Wales Police and Gwent Police.

Police said using it on people who are unconscious or dead would help officers identify them quickly, so that their families can be contacted with care and compassion.

In cases where someone is wanted for a criminal offence, the forces said the app would allow that person to be arrested and taken into custody quickly.

The police also said that cases of mistaken identity could be resolved quickly, without the person having to be taken to a police station or custody suite.

Police said photographs taken using the app would not be retained, and that photographs taken in private locations such as homes, schools, medical facilities and places of worship would only be used where there was a risk of significant harm.

But Mr Herfert said: “In the UK, none of us should have to reveal our identity to the police without a good reason, but this unregulated surveillance technology threatens to take away that fundamental right.”

Charlie Whelton of Liberty, another civil liberties group, said the technology was a “deeply offensive violation of our privacy rights, data protection laws and equality laws”.

“We urgently need the government to implement safeguards to protect us, rather than allowing police to continue experimenting at the expense of our civil liberties as we go about our daily lives.”

What is facial recognition?

The software takes a “probe image”, usually a face captured from CCTV or a mobile phone, and measures facial features – our biometric data.

These measurements are then compared with the custody images held in a database shared by police forces.
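The comparison step can be illustrated with a toy sketch. It assumes each face has already been reduced to a short list of numbers (a "feature vector") and uses cosine similarity as the matching score; both are assumptions for illustration only. Real systems rely on trained neural networks, much longer vectors and their own matching methods. The names `best_match` and `custody_db`, the sample records and the 0.9 threshold are all hypothetical and are not details of the system used by South Wales Police or Gwent Police.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Score how alike two feature vectors are, from -1 (opposite) to 1 (identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty (all-zero) vector cannot match anything
    return dot / (norm_a * norm_b)


def best_match(
    probe: list[float],
    database: dict[str, list[float]],
    threshold: float = 0.9,  # hypothetical cut-off, not a real police setting
) -> Optional[tuple[str, float]]:
    """Compare the probe with every stored record and return the closest one,
    but only if its score reaches the threshold; otherwise return None."""
    best_id, best_score = None, -1.0
    for record_id, features in database.items():
        score = cosine_similarity(probe, features)
        if score > best_score:
            best_id, best_score = record_id, score
    if best_id is not None and best_score >= threshold:
        return best_id, best_score
    return None


# Hypothetical custody records, each stored as a feature vector.
custody_db = {
    "record_A": [0.9, 0.1, 0.3],
    "record_B": [0.1, 0.8, 0.5],
}

print(best_match([0.88, 0.12, 0.31], custody_db))  # close to record_A
print(best_match([0.0, 0.0, 1.0], custody_db))     # no record is close enough
```

Returning nothing when the best score falls below the threshold, rather than always reporting the nearest record, reflects the idea that a weak resemblance should not be treated as an identification. In practice any candidate match would still be checked by an officer.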

In August 2020, following a legal challenge by the civil rights group Liberty and Ed Bridges, the Court of Appeal ruled that South Wales Police's use of automatic facial recognition (AFR) technology was unlawful.

But the court also found that its use was a proportionate interference with human rights because the benefits outweighed the impact on Mr Bridges.

Mr Bridges said he had been troubled by being identified by AFR.

What do police use facial recognition for?

Assistant Chief Constable Trudy Meyrick of South Wales Police said the new app would improve officers' "ability to accurately confirm a person's identity".

She said: "This technology does not replace traditional methods of identifying people, and our officers will only use it where doing so is both necessary and proportionate, with the aim of keeping that individual or the wider public safe."

Assistant Chief Constable Nick McLean of Gwent Police said adopting the technology was "integral to effective policing and public safety".

“The use of this technology always involves human decision-making and oversight to ensure it is used legally, ethically, and in the public interest,” he said.

“We have a robust investigative process in place to ensure accountability and found no evidence of racial, age or gender bias in testing.”